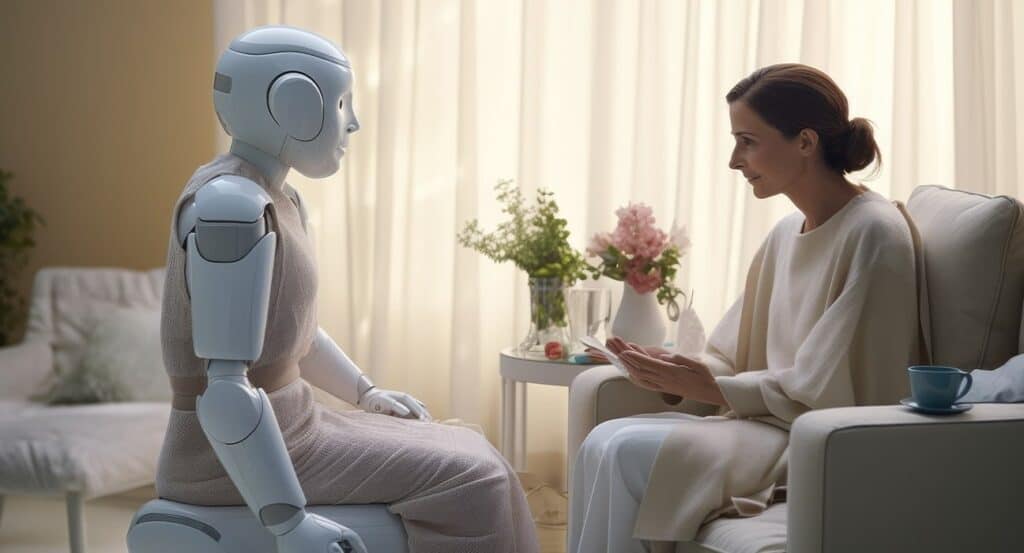

Why AI and Mental Health Are a Perfect Match in 2025

The global mental health crisis continues to escalate, with rates of depression and anxiety rising sharply since the COVID-19 pandemic. AI tools are stepping in to fill gaps left by stigma, a shortage of providers, and long wait times for treatment. The mental health AI market is projected to grow from $0.92 billion in 2023 to nearly $15 billion by 2033. These tools offer accessible, affordable, and stigma-free support around the clock.

Spotlight on Leading AI Mental Wellness Tools

These AI apps have gained traction by blending evidence-based techniques with user-friendly design:

Woebot

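- Approach: Structured conversations based on cognitive behavioral therapy (CBT), interpersonal psychotherapy (IPT), and dialectical behavior therapy (DBT). It uses natural language processing (NLP) to respond empathetically and includes daily mood tracking.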

- Evidence: A 2017 randomized controlled trial (RCT) found significant reductions in anxiety and depression scores within two weeks. Other studies report improvements in resilience and burnout.

- Engagement: Users report a strong “therapeutic alliance” (a sense of trust and collaboration) comparable to what people describe in human therapy.

- Reach: An estimated 1.5 million users. It is widely used in university and healthcare settings.

Wysa

- Approach: AI-driven conversations that offer CBT exercises and mindfulness support, with more than 500 million chats held worldwide.

- Effectiveness: Highly engaged users saw their depression scores drop by about 5.8 points. They also reported feeling understood, much as they do with human therapists.

Youper

- Approach: Emotion tracking combined with CBT and acceptance and commitment therapy (ACT) tools, delivered through a chatbot designed for self-reflection and mood insight.

- Effectiveness: A longitudinal study of more than 4,500 users reported significant decreases in anxiety (effect size d = 0.57) and depression (d = 0.46) over four weeks.

General Chatbots (ChatGPT, Replika, Character.AI)

- Many teens use them as friends or emotional outlets. In one survey, 70% of respondents reported chatting regularly with bot companions.

- Commonly used for journaling, creative prompts, or non-judgmental emotional exploration.

Real-World Use & Global Outcomes

- AI tools reached an estimated 200 million+ users in 2025, with reported average symptom reductions of about 25% globally.

- In India, Sangath’s AI voice service delivered measurable benefits for rural users in early crisis detection.

- Teens in Asia now often prefer chatbots to traditional talk therapy as a stigma-free space for emotional reflection.

Strengths & Shortcomings: What You Should Know

Strengths

- Always-on support that is anonymous and low-cost, which reduces barriers to accessing care.

- Effective for mild-to-moderate anxiety or depression, and for building habits such as journaling or practicing CBT exercises.

Limitations

- Lack of emotional nuance and relational depth compared with in-person therapy. This makes them a poor fit for severe conditions such as PTSD, or for people experiencing suicidal ideation.

- Over-reliance risk: Some users become emotionally dependent on bots or use them in place of human connection, which can hinder interpersonal development.

- Privacy and data ethics concerns: Chatbots may mishandle sensitive information, and most are not regulated as medical devices.

Best Practices: How to Use AI Tools Safely & Effectively

- Treat them as supplements, not replacements: They are meant to complement traditional treatment rather than substitute for it. They work well for emotional self-management or for support between therapy sessions.

- Balance digital and real-world habits: Combine app use with journaling, physical activity, good sleep, and healthy eating to keep technology and offline life in balance.

- Confirm clinical validation: Favor apps backed by peer-reviewed research, such as Wysa or Woebot.

- Safeguard your privacy: Choose apps that collect minimal data and have clearly defined privacy policies.

- Seek help when needed: Reach out to a mental health professional if you have experienced trauma, have suicidal thoughts, or notice your distress rising.

Final Thoughts

In 2025, AI mental wellness tools offer low-stigma, scalable, and accessible options for emotional support and self-care, particularly for mild-to-moderate concerns. Youper, Wysa, Woebot, and other chatbots can strengthen mental resilience and help users build a daily emotional self-care routine. Still, they are meant to complement proper mental health care, not replace it. A hybrid model that pairs AI tools with human oversight is the most promising approach to safe, effective, and ethical mental health care. Follow Allymonews for more useful content.